I, too, have an experience to share regarding AI!

TL;DR: The excitement around how amazing AI has become at replicating human creativity hides some negative impacts, too. Here is but one: plagiarism detection.

I am coming up to the halfway mark of my doctoral studies. During my previous course, urged on by my professor, I started using Grammarly for the first time.

Grammarly's suggestions have been an amazing tool for helping me become more conscious of sentence structure and word choice while writing my papers.

However, to this day, I *always* hand-review the web version's recommendations and manually apply the changes I accept to an offline copy. I still have a deep-rooted fear of being accused of plagiarism, and I have no idea what kind of “fingerprint” AI leaves on my papers.

Pondering that question, I installed the Grammarly Mac app for the first time today, and I am using it right now to correct my punctuation and suggest better sentence structures as I write this post.

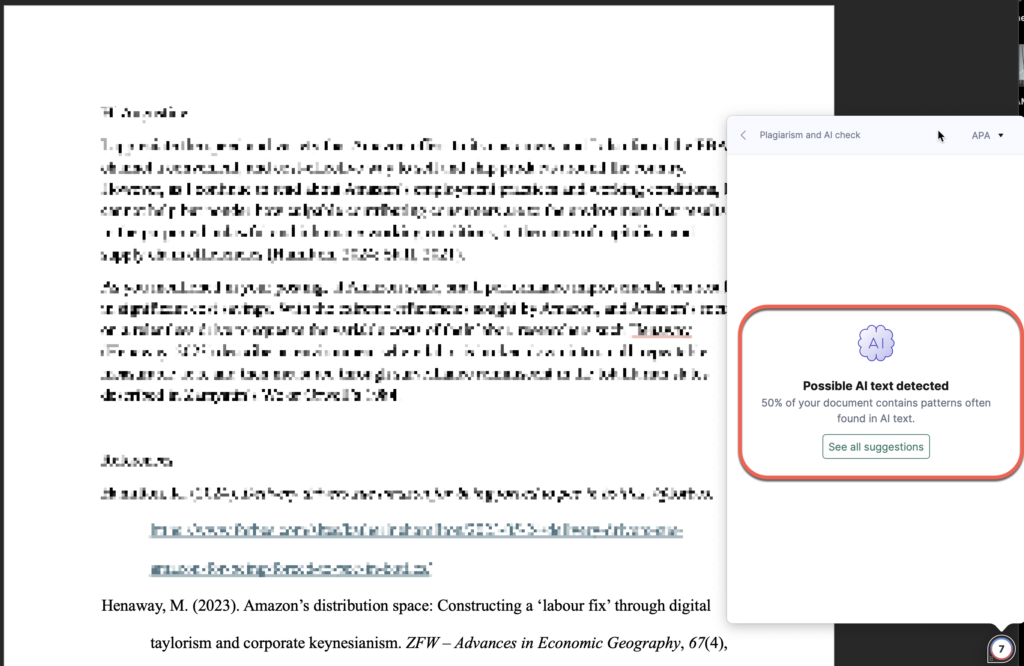

The genesis of this post: a few minutes ago, I opened the newly installed Grammarly add-in and asked it to check for plagiarism in a paper I had begun writing by hand, straight from my head. See the attached image above.

Apparently, 50% of the ideas that traveled from my brain, through the nerves and muscles of my fingers, and onto the digital paper of Microsoft Word show signs of having been plagiarized through the use of artificial intelligence.

So I started to think: what if a university, driven by societal pressure to adopt AI, picks an arbitrary KRI/KPI (we do this ALL the time in the real world) and decides that if an AI detector thinks 60% of my paper is AI-generated, I will be accused of plagiarism?

Should I go back to not using any form of AI so that I can, in good conscience, declare that I *never* use it? Or should I move forward and hope that universities and professors are themselves learning about the flaws and limits of AI amid all its amazing new benefits?

The irony is not lost on me that Grammarly is telling me I should rewrite much of this post for simplicity and clarity. Looking at its suggestions, I suspect it is right.

However, my version sounds more “Human,” even if more flawed, and perhaps, just perhaps, by ignoring those great suggestions, my post will bear fewer traces of AI's fingerprint.

[NOTE: Grammarly reminded me of the sometimes hotly debated rule that the comma goes inside the quotation marks, not outside!]

Soon, I may find myself dancing the dance with Grammarly just to alter my human-written paper so that it does not look plagiarized to professors and universities who are, like everyone else, struggling to understand where AI fits into this brave new world. I can see a vicious cycle.

What tangled webs we weave.

P.S. Apparently, Grammarly originally flagged 14% of this post as plagiarized. I changed a few sentences, and now it says 0%. Maybe it is so low because I left all my poorly structured sentences in place. 😂